Why experimentation of AI products without economic intelligence is a strategic mistake

By John Kim • Dec 2, 2025

Why experimentation of AI products & features without economic intelligence is a strategic mistake

And why companies need cost, value and margin visibility & optimization while still experimenting.

It seems as though every SaaS company is experimenting with AI right now. They’re adding features, capabilities, and agentic workflows; gauging demand and ROI (for themselves and for their customers); and searching for the right pricing and monetization strategies.

I repeatedly hear the same thing:

“We’re still experimenting with AI features and products. Figuring out the pricing model(s), taking into account the move to usage / outcome based pricing and also how our company is valued by Wall Street. We’ll worry about margin optimization and intelligence next in 6-12 months.”

Or:

“We’re currently just focused on growth at all costs. We’re in land grab mode. We’re not worried about our costs or margins, yet.”

Here’s the uncomfortable truth:

By the time all of this is sorted, 80% of the margin, pricing, and architectural decisions are already locked in, and in many cases wrong decisions were made.

The experimentation phase is where the hidden risks and economic traps get created. It’s also when sub-optimal pricing strategies are deployed, leaving money on the table.

The myth: “We’ll optimize margins later.”

This is the #1 issue that comes back to haunt companies in the agentic era.

Experimentation feels simple and flexible. A few prompts here, a few agents there, a RAG prototype, a vector search trial… but underneath the surface, three irreversible things are already happening:

Usage patterns are forming

Early experiments define:

What data gets retrieved

How often agents call each other

How tokens compound across workflows

What context windows need to be

How many retries & fallbacks you build into orchestration

You won’t change these behaviors once customers adopt them.

Product expectations are being set

If your beta users or early adopters get used to a “magical” but expensive workflow, you will either bleed margin or degrade the experience later and appear to regress. Neither option is good.

Architecture becomes path-dependent

Today’s AI product decisions will determine:

Your deployment model

Your caching strategy

Your provider mix

Your retrieval depth

Your re-ranking strategy

Your context windows

Your agent combinatorics

Once shipped, these choices become difficult, time-consuming, and costly to change.

The AI experiment trap: The illusion of knowing your AI costs

Most product teams believe they understand their AI economics. Early on, they see small invoices from their LLM providers and assume costs will scale linearly.

They can see their total LLM spend and the total number of API calls. From there, they calculate a rough average cost per user and blend the token cost across models.

Since their prices far exceed those initial costs, they assume they’re fine. Or they apply caps, potentially reducing customer value. This feels logical, but it is dangerously misleading.

Aggregate LLM cost tells you nothing about what matters:

Cost per agent

Cost per workflow

Cost per feature

Cost per customer

Cost per segment

Cost per retrieval depth

Cost per context window

Cost per fallback path

Cost per agent cascade

Every one of those variables can 10x the cost curve, and these details don’t show up in the monthly invoices they receive from their LLM providers.

This is why companies get blindsided. Not because they’re not monitoring cost, but because they’re only monitoring aggregate cost, which tells them nothing about the economics that matter.
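As a minimal sketch of what granular attribution could look like, the Python below tags each LLM call with the customer, workflow, and agent that triggered it, then rolls cost up along any one of those dimensions. The record fields, the `PRICE_PER_1K` table, and the prices in it are hypothetical placeholders, not any provider's actual rates or API.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens (input, output).
# Substitute your provider's real rates.
PRICE_PER_1K = {
    "small-model": (0.0005, 0.0015),
    "large-model": (0.0100, 0.0300),
}

@dataclass(frozen=True)
class LLMCall:
    customer: str
    workflow: str
    agent: str
    model: str
    input_tokens: int
    output_tokens: int

def call_cost(call: LLMCall) -> float:
    """Cost of one call in USD under the assumed price table."""
    in_price, out_price = PRICE_PER_1K[call.model]
    return call.input_tokens / 1000 * in_price + call.output_tokens / 1000 * out_price

def cost_by(calls: list[LLMCall], dimension: str) -> dict[str, float]:
    """Sum cost along one dimension: 'customer', 'workflow', 'agent', or 'model'."""
    totals: dict[str, float] = defaultdict(float)
    for call in calls:
        totals[getattr(call, dimension)] += call_cost(call)
    return dict(totals)

# Illustrative call log; all names and token counts are made up.
calls = [
    LLMCall("acme", "summarize", "planner", "small-model", 2_000, 500),
    LLMCall("acme", "research", "retriever", "large-model", 40_000, 2_000),
    LLMCall("globex", "summarize", "planner", "small-model", 1_500, 400),
]

print(cost_by(calls, "customer"))
print(cost_by(calls, "workflow"))
```

In this toy log the aggregate spend is about $0.46, which by itself says little. The per-dimension view shows that nearly all of it comes from one customer's `research` workflow, which is the kind of detail a monthly invoice does not surface.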

They also don’t take into consideration:

Impact of spam attacks

Impact of prompt injection attacks

Usage spikes

Impact of bad prompts

Impact of successful caching

Alternative LLM costs

Impact of prompt padding

Impact of prompt & model compression

Internal vs external user skew

Multi-agent inflation

Depending on how they are handled, these factors can sharply increase or decrease costs, with a large effect on ROI.

This is how companies fool themselves into thinking their monetization model will work, only to discover later that:

The Pareto Principle is in effect: 20% of customers account for 80% of the costs

A single agent chain can destroy their gross margins

One workflow ends up costing 10x more than what they assumed

One fallback loop doubles token usage

One segment becomes completely unprofitable

Enterprise customers exploit edge cases

Context window bloat erases margins

Retrieval depth scales unpredictably

A new feature multiplies cost across all other features

Spam attacks and irrelevant prompts are common and recurring events driving up costs by 20-40%

Prompt injection attacks can lead to IP loss, data loss and fines

And that AI costs do not scale linearly. They compound.

AI features don’t behave like traditional SaaS features. Their costs multiply with every inference, API call, and model chain call. They behave like economic fractals: a single prompt becomes 12 model calls, a single workflow becomes 4 agents, an agent triggers a chain of 30 decisions, a small increase in retrieval depth doubles the cost, and token usage explodes.
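One mechanism behind this compounding can be shown with simple arithmetic. The sketch below assumes an agent chain in which every step re-reads the original prompt plus the output of every previous step. The function name and token counts are hypothetical, and real orchestration layers may truncate or summarize context, so treat this as an illustration rather than a cost model.

```python
def chain_input_tokens(steps: int, prompt_tokens: int, output_per_step: int) -> int:
    """Total input tokens billed across a chain where each step re-reads
    the original prompt plus every previous step's output."""
    total = 0
    context = prompt_tokens
    for _ in range(steps):
        total += context            # this step reads the whole context so far
        context += output_per_step  # and appends its own output for the next step
    return total

for steps in (1, 5, 10, 30):
    tokens = chain_input_tokens(steps, prompt_tokens=1_000, output_per_step=500)
    print(f"{steps:>2} steps -> {tokens:,} input tokens")
```

Under these assumptions, a 30-step chain bills 247,500 input tokens against 1,000 for a single step: roughly 250 times the tokens for 30 times the steps. In this simplified model the growth is quadratic in chain length, which is already enough to break any linear cost forecast.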

Too many companies come to these realizations too late. Just ask Microsoft. According to the Wall Street Journal, its $10/user/month GitHub Copilot was costing an average of $30/user/month to serve, with some users costing $80/user/month.

This is why cost visibility, attribution, and optimization must be part of your AIOps strategy while you experiment, not during beta and certainly not after product launch. Without them, new products may ship with a negative margin, or you may shelve products that could have been made profitable by optimizing their costs.

Why you can’t set pricing or monetization strategy without economic visibility

If you don’t know the cost per customer, you don’t know your true margins

If you don’t know your true margins, you can’t measure value

If you can’t measure value correctly, you can’t price correctly

If you can’t price correctly, you can’t scale AI profitably
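The first link in that chain is plain arithmetic. As a small sketch, gross margin per customer is (price minus cost to serve) divided by price. The function name is illustrative, and the inputs are the GitHub Copilot figures cited earlier in this article.

```python
def gross_margin(price: float, cost_to_serve: float) -> float:
    """Gross margin as a fraction of price; negative means each sale loses money."""
    if price <= 0:
        raise ValueError("price must be positive")
    return (price - cost_to_serve) / price

price = 10.0  # USD per user per month
for label, cost in [("average user", 30.0), ("heaviest users", 80.0)]:
    print(f"{label}: {gross_margin(price, cost):.0%}")
```

At a $10 price, a $30 cost to serve is a margin of -200%, and an $80 cost is -700%. Neither number is visible unless cost is tracked per customer rather than in aggregate.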

Today, teams are guesstimating usage and estimating costs. They’re applying arbitrary caps to give themselves a feeling of safety. They assume costs will scale linearly and hope that margins will hold.

Hope is not a monetization strategy. AI usage does not scale like SaaS usage. It scales like agent combinatorics. It’s messy, unpredictable, and nonlinear. Without visibility, companies are flying blind.

Companies are trying to make fundamental decisions like:

Should AI be usage-based, outcome-based, flat-rate or hybrid?

Should there be limits / caps? If so, what should the limit be and will it reduce customer value?

Should this feature be premium, add-on or included with core products?

Should there be volume discounts?

Should there be usage / usage commitment tiers?

Can we mitigate unprofitable usage patterns?

What should the margin target be per workflow?

These questions cannot be answered without knowing:

Cost per workflow

Cost per agent chain

Cost per customer segment

Cost per model

Cost per context window

Cost vs value delivered

Without these numbers, margin erosion comes as a surprise. The causes become guesswork, as do the fixes, which leads to uncomfortable questions at the next board meeting. During experimentation, once granular costs are known, combined with observed usage patterns and compared against value delivered, the pricing model that maximizes revenue becomes clear, along with a blueprint for mapping value to price for your customers.

Economic intelligence during experimentation is paramount

If you’re building AI features and products, the experimentation phase is where your business model is created.

You need visibility into:

Cost per agent and per workflow

Which chains are efficient?

Which explode under load?

Cost per feature

Which experiments are viable?

Which workflows kill margin at scale?

Cost per customer

Which segments will consume the most usage?

Which industries?

Cost variance across LLMs & providers

Which models offer the right blend of quality, performance and economics?

Should I use more than one?

Usage trends

Should we put in usage caps?

Context size limits?

Throttle?

Companies need to know what profitable consumption actually looks like. You can’t design pricing around averages; you must design around distribution tails. This is not possible without economic intelligence.
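To make the averages-versus-tails point concrete, here is a small sketch that summarizes a set of per-customer costs by mean, median, 95th percentile, and the share of total cost driven by the most expensive 20% of customers. The cost figures and the $20 plan are invented for illustration, and the percentile uses a simple index rule rather than any particular statistical convention.

```python
import statistics

def tail_summary(costs: list[float]) -> dict[str, float]:
    """Compare the average with the tail of a per-customer cost distribution."""
    ordered = sorted(costs)
    n = len(ordered)
    top_n = max(1, round(n * 0.2))         # the most expensive 20% of customers
    p95_index = min(n - 1, int(0.95 * n))  # simple index rule for the 95th percentile
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[p95_index],
        "top20_share": sum(ordered[-top_n:]) / sum(ordered),
    }

# Hypothetical monthly cost to serve (USD) for ten customers on the same $20 plan.
monthly_costs = [2, 3, 3, 4, 4, 5, 6, 8, 45, 120]
summary = tail_summary(monthly_costs)
print(summary)
```

In this made-up sample the mean cost is $20, so the plan looks break-even on average. The median customer costs only $4.50, while two customers account for 82.5% of total cost. A price or cap designed around the $20 average would overcharge most customers and still lose heavily on the tail.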

Simulating before you ship

The smartest teams are now running simulations during experimentation.

They are:

Comparing LLM providers

Measuring model quality vs cost vs latency

Evaluating caching strategies

Testing retrieval depth

Simulating worst-case agent cascades

Forecasting cost per customer segment

Pressure testing premium vs base tier features

Understanding how cost changes with scale, and with the addition of each new feature / product

This is how you build AI products that scale, while maintaining a healthy margin.
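As one hedged illustration of simulating an agent cascade, the sketch below runs a seeded Monte Carlo over a workflow in which each request calls a fixed number of agents and retries failed calls. Every parameter (agent count, failure probability, retry limit, cost per call) is a hypothetical input you would replace with measured values, and the model ignores token-level variation for brevity.

```python
import random

def simulate_request(rng: random.Random, agents: int, fail_prob: float,
                     max_retries: int, cost_per_call: float) -> float:
    """Cost of one request: every agent is called once and retried on failure."""
    calls = 0
    for _ in range(agents):
        attempts = 1
        # Retry while retries remain and the previous attempt failed.
        while attempts <= max_retries and rng.random() < fail_prob:
            attempts += 1
        calls += attempts
    return calls * cost_per_call

def simulate(requests: int, seed: int = 0, **config) -> dict[str, float]:
    """Run many requests and report mean, p99, and theoretical worst-case cost."""
    rng = random.Random(seed)
    costs = sorted(simulate_request(rng, **config) for _ in range(requests))
    worst = config["agents"] * (1 + config["max_retries"]) * config["cost_per_call"]
    return {
        "mean": sum(costs) / requests,
        "p99": costs[min(requests - 1, int(0.99 * requests))],
        "worst_case": worst,
    }

# Same workflow under a normal day and a hypothetical provider incident.
normal = simulate(10_000, agents=4, fail_prob=0.02, max_retries=3, cost_per_call=0.01)
incident = simulate(10_000, agents=4, fail_prob=0.60, max_retries=3, cost_per_call=0.01)
print("normal  ", normal)
print("incident", incident)
```

The design choice worth noting is that the retry limit, not the average failure rate, sets the worst-case cost per request. Running the same workflow under a degraded-provider scenario shows how far mean cost can drift from the normal-day figure before anyone changes a line of product code.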

Where Payloop fits in

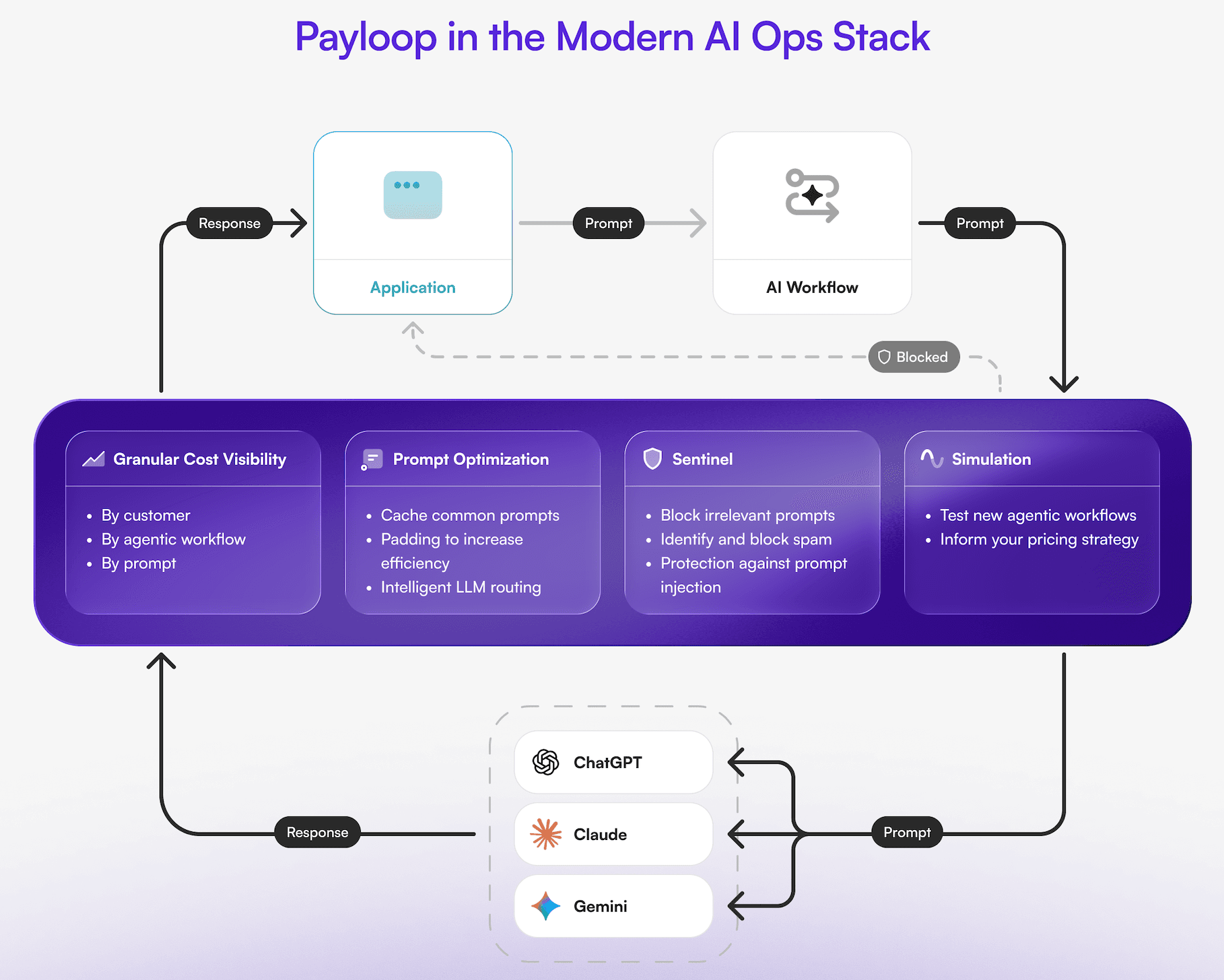

Payloop provides the Economic Intelligence Layer that is missing from today’s AIOps and observability stacks.

We enable teams to:

Track token and cost per agent, workflow, customer, feature

Map multi-agent cascades

Analyze cost per feature

Model cost curves by segment

Simulate architecture and models

Compare LLM providers based on economics and quality

Identify expensive behaviors during experiments

Design pricing strategies based on data

Ensure healthy margin profiles

With our Sentinel product we also add:

Wasted spend highlighting

Irrelevant prompt blocking

Auto-Caching

Prompt padding

Real time LLM routing

LLM simulation

Pricing model & strategy recommendations

On average Sentinel customers reduce AI spend by over 64%.

This isn’t just optimization. This is monetization infrastructure for the AI era. You can’t experiment intelligently without it.

Closing thoughts

The companies that win in this AI era won’t be the ones that build the flashiest features.

They will be the ones that understand their economics early enough to design:

Sustainable Architectures

Profitable Workflows

Predictable Usage Patterns

Scalable, Healthy Margins

Smart Pricing and Packaging

AI experimentation is where the economics are first created…and where the biggest mistakes happen.

Economic intelligence and margin optimization are no longer optional. They’re foundational.